The Unseen Factors Fueling AI’s Creativity

The original version of this article appeared in Quanta Magazine.

We were once promised self-driving cars and robot helpers. Instead, we have seen the rise of artificial intelligence systems that can beat us at chess, sift through vast amounts of text, and even compose sonnets. This has been one of the great surprises of the modern era: physical tasks that are easy for humans turn out to be hard for robots, while algorithms are increasingly able to mimic our intellect.

Another surprise that has long puzzled researchers is these algorithms’ knack for their own strange kind of creativity.

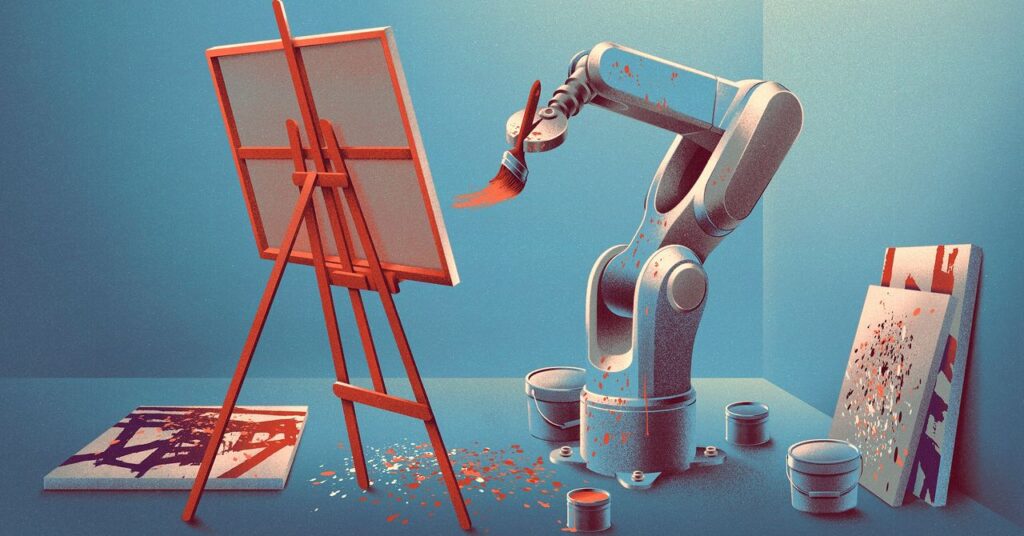

Diffusion models, the foundation of image-generating tools such as DALL·E, Imagen, and Stable Diffusion, are intended to produce exact copies of the images they are trained on. However, in practice, they appear to experiment, merging elements within images to generate something original—not merely nonsensical splashes of color, but coherent images with meaningful context. This creates a “paradox” surrounding diffusion models, as noted by Giulio Biroli, an AI researcher and physicist at the École Normale Supérieure in Paris: “If they functioned perfectly, they would merely memorize,” he stated. “However, they don’t—they can actually create new samples.”

To create images, diffusion models utilize a process called denoising. They transform an image into digital noise (a disordered collection of pixels) before reconstructing it. It’s akin to repeatedly running a painting through a shredder until only fine dust remains, then piecing it back together. For years, researchers have pondered: If the models are merely reassembling, how does innovation emerge? It’s comparable to reconstructing your shredded painting into an entirely new artwork.
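The shred-and-reassemble loop can be made concrete with a toy sketch. The code below assumes a DDPM-style linear noise schedule, which the article does not specify, and the names `alpha_bar`, `add_noise`, and `remove_noise` are hypothetical helpers chosen for illustration. A real diffusion model uses a trained neural network to estimate the noise; here the true noise is handed back directly, which is the "perfect" case that simply reproduces the original image.

```python
import math
import random


def alpha_bar(t, num_steps, beta_start=1e-4, beta_end=0.02):
    """Fraction of the original signal left after t steps of a linear schedule.

    The schedule endpoints are illustrative assumptions, not values from the article.
    """
    remaining = 1.0
    for s in range(t):
        beta = beta_start + (beta_end - beta_start) * s / (num_steps - 1)
        remaining *= 1.0 - beta
    return remaining


def add_noise(image, t, num_steps, rng):
    """Forward process: blend the image with Gaussian noise ("shredding")."""
    a = alpha_bar(t, num_steps)
    noise = [rng.gauss(0.0, 1.0) for _ in image]
    noisy = [math.sqrt(a) * x + math.sqrt(1.0 - a) * e
             for x, e in zip(image, noise)]
    return noisy, noise


def remove_noise(noisy, predicted_noise, t, num_steps):
    """Reverse step: subtract an estimate of the noise and rescale."""
    a = alpha_bar(t, num_steps)
    return [(y - math.sqrt(1.0 - a) * e) / math.sqrt(a)
            for y, e in zip(noisy, predicted_noise)]


if __name__ == "__main__":
    rng = random.Random(0)
    image = [0.0, 0.5, 1.0, 0.25]          # a four-pixel stand-in for an image
    noisy, noise = add_noise(image, 500, 1000, rng)
    restored = remove_noise(noisy, noise, 500, 1000)
    print(restored)                         # matches `image` up to rounding
```

With a perfect noise estimate the output is a copy of the input, so anything new must come from how a trained model's estimate differs from the ideal one.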

Recently, two physicists made a surprising claim: imperfections in the denoising process itself give rise to the creativity of diffusion models. In a paper presented at the International Conference on Machine Learning 2025, they developed a mathematical model of trained diffusion models to show that their so-called creativity is in fact a deterministic process, a direct and unavoidable consequence of their design.

By shedding light on the inner workings of diffusion models, this new research could have significant implications for future AI research, and perhaps for our understanding of human creativity. “The genuine strength of the paper is in its precise predictions of something quite intricate,” remarked Luca Ambrogioni, a computer scientist at Radboud University in the Netherlands.

Bottoms Up

Mason Kamb, a graduate student in applied physics at Stanford University and the lead author of the new paper, has long been fascinated by morphogenesis: the processes by which living systems self-organize.

One way to understand the development of embryos in humans and other animals is through what is known as a Turing pattern, named after the 20th-century mathematician Alan Turing. Turing patterns explain how groups of cells can organize themselves into distinct organs and limbs. Crucially, this coordination happens at a local level. There is no central authority directing the trillions of cells to make sure they conform to a final body plan. In other words, individual cells have no finished blueprint of a body; they simply respond to signals from their neighbors. This bottom-up system usually runs smoothly, but occasionally it misfires, producing hands with extra fingers, for example.
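The idea that each cell responds only to its neighbors can be illustrated with a small simulation. The sketch below uses the Gray-Scott equations, a standard reaction-diffusion model swapped in here for illustration; the article does not name a specific model, and the parameter values and the function name `step` are assumptions. Two chemical concentrations, `u` and `v`, live on a ring of cells, and each cell's update reads only its own values and those of its two immediate neighbors.

```python
def step(u, v, du=0.16, dv=0.08, feed=0.035, kill=0.060):
    """Advance a ring of cells by one time step of a Gray-Scott rule.

    Each cell sees only itself and its left and right neighbors;
    no cell has access to the global state.
    """
    n = len(u)
    new_u, new_v = [], []
    for i in range(n):
        left, right = (i - 1) % n, (i + 1) % n
        # Discrete diffusion: how much a cell differs from its neighbors.
        lap_u = u[left] + u[right] - 2.0 * u[i]
        lap_v = v[left] + v[right] - 2.0 * v[i]
        # Local reaction: v consumes u to make more v.
        reaction = u[i] * v[i] * v[i]
        new_u.append(u[i] + du * lap_u - reaction + feed * (1.0 - u[i]))
        new_v.append(v[i] + dv * lap_v + reaction - (feed + kill) * v[i])
    return new_u, new_v


if __name__ == "__main__":
    n = 20
    u, v = [1.0] * n, [0.0] * n    # uniform resting state
    u[10], v[10] = 0.5, 0.5        # disturb a single cell
    u, v = step(u, v)
    changed = [i for i in range(n) if u[i] != 1.0 or v[i] != 0.0]
    print(changed)                  # only the disturbed cell and its neighbors
```

After one step the disturbance has reached only the adjacent cells, so any large-scale structure that emerges over many steps is built purely from local interactions.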