Can AI Steer Clear of the Degradation Dilemma?

I recently took a trip to Italy. Like many travelers these days, I consulted GPT-5 for sightseeing ideas and places to eat. The bot suggested a dinner spot a short walk from our hotel in Rome, down Via Margutta. It turned out to be one of the most memorable meals I’ve had. When I got home, I asked the model how it had arrived at that recommendation. (I hesitate to name the restaurant in case I want a table again someday. Then again, who knows if I’ll return: The place is called Babette. Be sure to call ahead for a reservation.) The response was detailed and impressive. Key factors included enthusiastic local reviews, mentions in food blogs and the Italian press, and the restaurant’s renowned fusion of traditional Roman and modern cuisine. Oh, and the proximity.

I had to contribute something as well: trust. I had to accept that GPT-5 was a fair arbiter, recommending the restaurant without bias; that the place wasn’t simply being shown to me as sponsored content, and that OpenAI wasn’t profiting from my choice. I could have done in-depth research to verify the suggestion (I did check out the website), but the whole point of using AI is to avoid that hassle.

This experience boosted my faith in AI results but also raised a question: As companies like OpenAI grow more powerful and try to repay their investors, will AI succumb to the same erosion of value that seems endemic to the tech platforms we use today?

Word Play

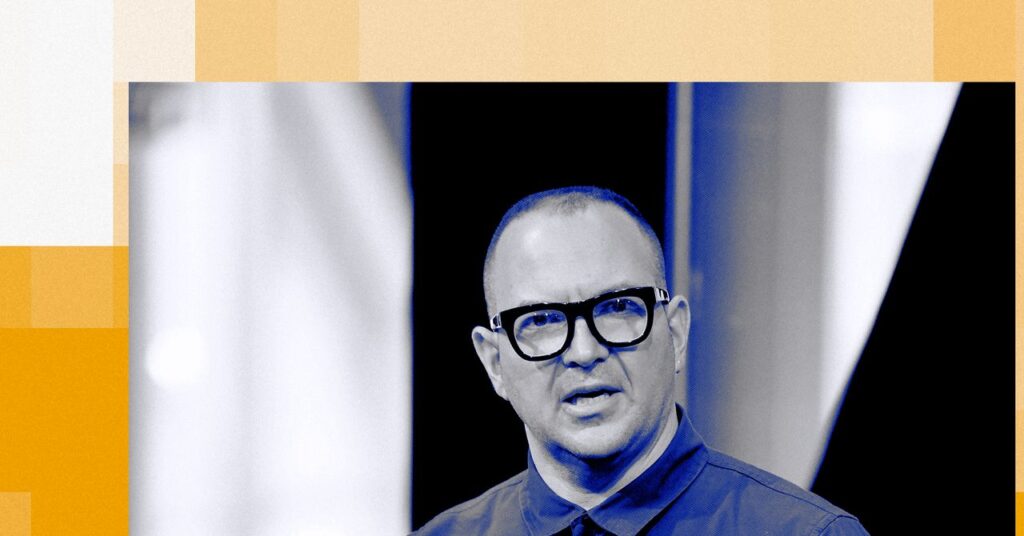

Writer and tech critic Cory Doctorow calls that erosion “enshittification.” His argument is that platforms such as Google, Amazon, Facebook, and TikTok start out trying to please users, but once they have eliminated the competition, they deliberately degrade their services to maximize profits. After WIRED republished Doctorow’s groundbreaking 2022 essay on this trend, the term caught on, largely because people recognized the pattern it described. Enshittification was named the American Dialect Society’s 2023 Word of the Year. The concept has been cited so often that it has transcended its crude origins, appearing in places that would normally avoid such vocabulary. Doctorow has just released a book on the subject; the cover features the emoji for … you guessed it.

If chatbots and AI agents fall victim to enshittification, the consequences could be graver than Google Search becoming less useful, Amazon being flooded with ads, or Facebook favoring outrage-inducing clickbait over meaningful social content.

AI is on track to become a constant companion, providing instant answers to all sorts of questions. People already rely on it to understand current events, to guide purchasing decisions, and even to make personal choices. Given the enormous cost of building a full-scale AI model, it’s reasonable to assume that only a handful of companies will dominate the field. All of them plan to spend hundreds of billions of dollars in the coming years to improve their models and get them to as many users as possible. Right now, I’d say AI is in what Doctorow calls the “good to the users” phase. But the pressure to recoup those massive capital investments will be immense, especially for firms with a locked-in user base. Those conditions, as Doctorow notes, could lead companies to exploit their users and business customers “to reclaim all the value for themselves.”

When people imagine the enshittification of AI, advertising is usually the first concern. The ultimate fear is that AI models will make recommendations based on which companies have paid for placement. That isn’t happening yet, but AI companies are actively exploring advertising. In a recent interview, OpenAI CEO Sam Altman said, “I believe there probably is some cool ad product we can do that is a net win to the user and a sort of positive to our relationship with the user.” Meanwhile, OpenAI just announced a partnership with Walmart that lets the retailer’s customers shop within the ChatGPT app. Can’t foresee any conflicts there! The AI search platform Perplexity includes sponsored results in clearly labeled follow-ups. But it promises that “these ads will not change our commitment to maintaining a trusted service that provides you with direct, unbiased answers to your questions.”